DeepSeek R1 has been making waves for a while now because of its low running cost and the accuracy it delivers. However, the model is developed by a Chinese company, and many of us would rather not send our data to servers in China. In this post, we take a technical look at how to run the DeepSeek R1 model locally on your own system.

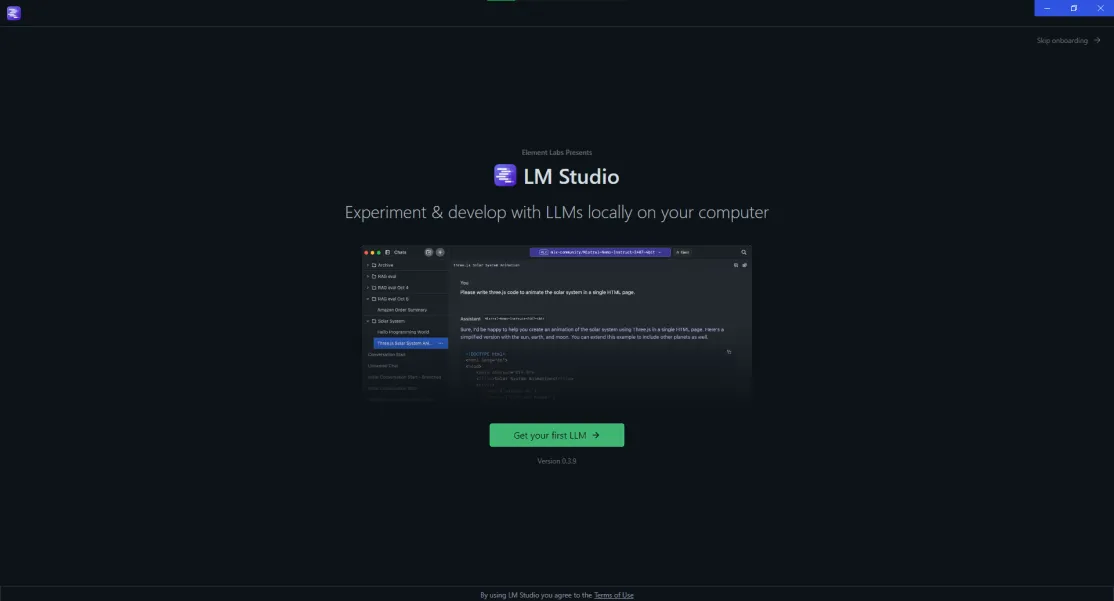

To get started, download and install LM Studio. It is similar to Ollama, which also lets you run LLMs locally. Ollama is mainly used through the command line, whereas LM Studio lets you chat with the model through a graphical interface, just like you would with ChatGPT.

What is LM Studio?

LM Studio is a desktop application that runs on macOS, Windows, and Linux. It lets you run Large Language Models (LLMs) on your local machine and provides a user-friendly interface for managing models and chatting with them. All of this can be done without any coding knowledge!

What is DeepSeek R1?

DeepSeek R1 is a state-of-the-art Large Language Model known for its strong performance, compared with other models on the market, across a variety of tasks including text generation, question answering, and code completion. Because the model is open source, we can run it locally and even modify it if we want to. Running it on our own machine saves costs and protects our privacy compared with using DeepSeek through its web UI. On top of that, at the time of writing, DeepSeek's website has been hit by a DDoS attack that has made it largely unusable.

Prerequisites

- A computer running Windows, macOS, or Linux.

- At least 16GB of RAM (32GB or more for the best performance).

- At least 10GB of free disk space, or more depending on the model you choose.
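To get a feel for which model size your machine can handle, a rough rule of thumb is: memory needed ≈ number of parameters × bytes per weight, plus some headroom for the context cache and runtime. The sketch below encodes that estimate. It is our own approximation, not an official LM Studio formula; the 20% `overhead` factor and the function names are assumptions, and real usage varies with the quantization format and context length.

```python
def estimate_model_memory_gb(params_billion: float, bits_per_weight: float,
                             overhead: float = 1.2) -> float:
    """Rough memory estimate (in decimal GB) for loading a model.

    params_billion:  parameter count in billions (e.g. 7 for a 7B model)
    bits_per_weight: weight precision (16 for FP16, about 4 for 4-bit quantization)
    overhead:        assumed multiplier for context cache and runtime buffers
    """
    bytes_per_weight = bits_per_weight / 8
    # 1e9 parameters * bytes per weight / 1e9 bytes per GB cancels out
    weights_gb = params_billion * bytes_per_weight
    return weights_gb * overhead


def fits_in_ram(params_billion: float, bits_per_weight: float, ram_gb: float) -> bool:
    """Return True if the estimated footprint fits within the given RAM."""
    return estimate_model_memory_gb(params_billion, bits_per_weight) <= ram_gb


if __name__ == "__main__":
    # Compare a few common model sizes at 4-bit quantization against 16 GB of RAM
    for size in (1.5, 7, 14, 32, 70):
        need = estimate_model_memory_gb(size, 4)
        print(f"{size}B @ 4-bit: ~{need:.1f} GB, fits in 16 GB: {fits_in_ram(size, 4, 16)}")
```

Under these assumptions, a 7B model at 4-bit needs roughly 4.2 GB, while a 32B model needs around 19.2 GB and would not fit in 16 GB of RAM.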

Setting up LM Studio

- Go to the LM Studio website and download the version for your operating system.

- Install the app by following the installation steps.

- Launch LM Studio.

- Click the "Search" icon (the fourth option in the left sidebar).

- Type "DeepSeek R1" into the search bar and download the model that best suits your PC's configuration. Once the download finishes, a load model button will appear; press it to load the model in the app. Note: choose a model that matches your machine's specs, otherwise the model will respond very slowly.

- Once the model is loaded, you can chat with it just like any other chatbot. The response time will vary depending on your system specs.
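Besides the chat window, LM Studio can also serve a loaded model through a local, OpenAI-compatible HTTP server, which is handy if you want to call the model from your own scripts. The sketch below assumes that server has been started in LM Studio and is listening on its default address `http://localhost:1234/v1`. The model identifier `deepseek-r1-distill-qwen-7b` is hypothetical; replace it with the name LM Studio shows for the model you downloaded. The helper names and the `0.6` temperature are illustrative choices, not part of LM Studio.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port (1234).
BASE_URL = "http://localhost:1234/v1"
# Hypothetical identifier: use the model name LM Studio shows for your download.
MODEL_ID = "deepseek-r1-distill-qwen-7b"


def build_chat_payload(prompt: str, model: str = MODEL_ID,
                       temperature: float = 0.6) -> dict:
    """Build an OpenAI-style chat completion request body for one user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def chat(prompt: str, base_url: str = BASE_URL) -> str:
    """Send one prompt to the local server and return the model's reply text."""
    body = json.dumps(build_chat_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Local models can be slow, so allow a generous timeout (in seconds)
    with urllib.request.urlopen(request, timeout=300) as response:
        data = json.loads(response.read().decode("utf-8"))
    # OpenAI-compatible responses put the reply in choices[0].message.content
    return data["choices"][0]["message"]["content"]
```

With the server running, `chat("Explain what an LLM is in one sentence.")` returns the model's reply as a string. Everything stays on `localhost`, so the prompt never leaves your machine.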

What have we done just now?

The DeepSeek R1 model is open source, which means anyone can use it for free. However, these models need a lot of GPU power to run, so hosted access is fairly expensive, and hosted services usually store user data on their servers to improve the user experience. If we use the model online through any platform, we have to pay for it and also hand over our data. To avoid both, we downloaded the model and ran it using our own machine's GPU and CPU. The app's data is stored on our machine as well, so we don't have to worry about privacy either.

If you are new to all this AI stuff, make sure to read some of our older blog posts too.

If you want to access this setup from anywhere over the internet, you can either set up a VPN to your home network or host the same setup in the cloud.

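You can also tweak the model's behavior a little, for example by adjusting its temperature in LM Studio. Temperature controls how random the output is: lower values give more focused, predictable answers, while higher values give more varied, creative ones.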
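To see why temperature has this effect, it helps to know that temperature typically rescales the model's raw scores for each candidate next token (logits) before they are turned into probabilities with a softmax. The sketch below illustrates that general technique; it is not LM Studio's internal code, and the logit values are made up for illustration.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw token scores into probabilities, scaled by temperature.

    Lower temperature sharpens the distribution (more deterministic output);
    higher temperature flattens it (more varied output).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Hypothetical scores for three candidate next tokens
    logits = [2.0, 1.0, 0.5]
    for t in (0.2, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        print(t, [round(p, 3) for p in probs])
```

Running it shows the top token's probability climbing close to 1 at a temperature of 0.2, while at 2.0 the three probabilities move much closer together, which is why high temperatures produce more varied text.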

Before loading any model, keep in mind that the model running on your machine will give different results from the one DeepSeek provides, because the versions you can run locally have far fewer parameters. The company runs its largest model on top-tier hardware, while the model on your machine may be much less capable. In general, the fewer parameters a model has, the less capable its answers tend to be.